Here is a recent shower thought:

It is usually said that the colors of noise are inspired by the spectral distributions of the corresponding colors of light. For example, the ‘white’ in white noise is an allusion to white light, which is thought to have a (mostly) flat spectrum. But is this so? What does white light actually look like, as an electromagnetic wave?

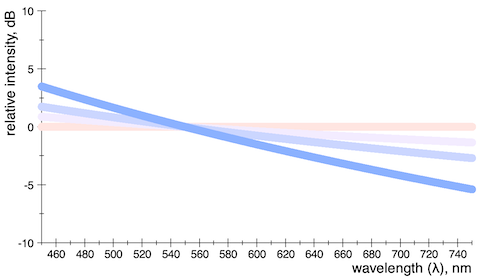

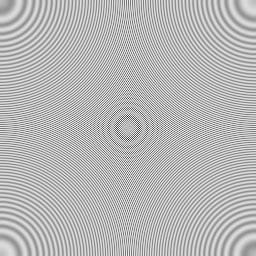

So I made the following figure, which shows some power-law distributions over the visible range of wavelengths, each colored by its theoretical appearance:

Fig 1: Spectral distributions with exponents from 0 to −4.
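For anyone who wants to play with curves like these, here is a minimal sketch of how such power-law spectra could be generated. It is not the code behind the figure. It assumes the power law is taken over frequency (as for colored noise), that the curves are normalised to 1 at 550 nm, and that the visible range is sampled from 380 to 780 nm in 5 nm steps. The function name and all of those choices are mine, and the sketch ignores the extra factor that appears when converting a per-frequency density into a per-wavelength one.

```python
C = 299_792_458.0  # speed of light in m/s


def power_law_spectrum(wavelengths_nm, exponent, ref_nm=550.0):
    """Relative power S(f) proportional to f**exponent, sampled at the
    given wavelengths and normalised to 1 at ref_nm (an assumed choice)."""
    ref_freq = C / (ref_nm * 1e-9)
    spectrum = []
    for w in wavelengths_nm:
        freq = C / (w * 1e-9)  # wavelength in nm -> frequency in Hz
        spectrum.append((freq / ref_freq) ** exponent)
    return spectrum


# Assumed sampling of the visible range: 380 nm to 780 nm in 5 nm steps.
wavelengths = list(range(380, 781, 5))

# One curve per exponent, from flat (0) down to -4.
spectra = {e: power_law_spectrum(wavelengths, e) for e in (0, -1, -2, -3, -4)}
```

With a negative exponent the power falls off towards higher frequencies, so these curves rise towards the red end of the wavelength axis. Turning a spectrum into a display color would additionally need color matching functions, which this sketch leaves out.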

The color of the flat line is also known as Standard Illuminant E – or equal-energy white light. Compared to the D65 white background it has a rather pinkish appearance with a correlated color temperature of about 5500 K.

I’m going to make a case for this later. But first things first.
