Today I am going to share a discovery that might not be newsworthy for many people, but to me it seemed somewhat scandalous at first. Could it really be true that an oversight of this kind slipped through the cracks and made it to the front page of publicly released NASA pictures? Apparently yes. This talk is about missing gamma correction in some space images, which gives them an unrealistic appearance. This issue seems to exist on top of the color-filter issue (the imaging instruments mostly do not have spectral sensitivities that correspond to human vision) and distorts the brightness relationships between objects. Extra caution is therefore advised when using these images as a reference for artistic purposes.

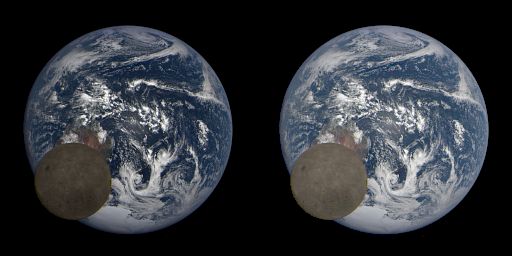

The Lunar Transit picture

Lunar transit as captured by the EPIC camera on board the Deep Space Climate Observatory. Left: image as published; right: corrected.

I remember how in 2015 an image of a lunar transit taken by the Earth Polychromatic Imaging Camera (EPIC) made the rounds in several Twitter threads. These transits happen regularly, the latest one being from February this year. There’s just one problem with these images as originally published on the NASA website: they’re too dark. It is as if somebody took the files with the linear photon counts from the scientific instruments and threw them together to make the images while forgetting to account for display gamma.
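
To make "forgetting to account for display gamma" concrete, here is a minimal sketch of what that step looks like. It assumes the display expects sRGB-encoded values; that is my assumption for illustration and not necessarily the encoding used in any NASA pipeline. The function name `linear_to_srgb` and the sample values are hypothetical, not measurements.

```python
def linear_to_srgb(v: float) -> float:
    """Encode a linear-light value in [0, 1] with the sRGB transfer function."""
    v = min(max(v, 0.0), 1.0)  # clamp to the displayable range
    if v <= 0.0031308:
        return 12.92 * v  # linear segment near black
    return 1.055 * v ** (1 / 2.4) - 0.055  # power-law segment


def to_8bit(v: float) -> int:
    """Quantize a value in [0, 1] to an 8-bit pixel value."""
    return round(v * 255)


# Hypothetical linear intensities for a dark and a brighter surface.
samples = [("dark surface", 0.12), ("bright surface", 0.30)]

for name, linear in samples:
    naive = to_8bit(linear)                    # linear value written straight to the file
    encoded = to_8bit(linear_to_srgb(linear))  # value after gamma encoding
    print(f"{name}: naive={naive}, gamma-encoded={encoded}")
```

Writing the linear value straight into the file gives pixel values that a display interprets as much darker than intended, and the effect is strongest for surfaces that are already dark.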

I’m going to make a case for this later. But first things first.
